── Attaching core tidyverse packages ──────────────────────── tidyverse 2.0.0 ──

✔ dplyr 1.1.4 ✔ readr 2.1.5

✔ forcats 1.0.0 ✔ stringr 1.5.1

✔ ggplot2 3.5.0 ✔ tibble 3.2.1

✔ lubridate 1.9.3 ✔ tidyr 1.3.1

✔ purrr 1.0.2

── Conflicts ────────────────────────────────────────── tidyverse_conflicts() ──

✖ dplyr::filter() masks stats::filter()

✖ dplyr::lag() masks stats::lag()

ℹ Use the conflicted package (<http://conflicted.r-lib.org/>) to force all conflicts to become errors

At present, we have 1318 individuals processed and I am waiting on the final 120~ish to finish up this weekend. While these last few are processed and SNPs are called, I am starting to get them configured for analysis (as in actually being able to get answers to this tremendously drawn out process).

This is not the full data set, this is a random subset of the data that has been derived due to time constraints. These data consist of:

- A 5% random sample of each individuals sequences.

- Mapped onto a conservative de novo reference genome assembly that contains only 6,676 contigs. This reference genome was created from 2.5% random sample of each individuals genome as well. See this post for more about the assembly and why we chose to use this sized assembly as a reference.

Filtering

To start with, we need to filter the loci. In the current data, we have identified 19,879 sites of potential interest. Next, we filter these down by throwing away ones that do not conform to some rigid tests designed to minimize errors (sequencing errors, etc.). Using vcftools, the first pass of the data was done considering only SNP loci (no sites in insertion/deletion locations), sites with sufficiently high sequencing quality scores (to ensure confidence in identity of nucleotide called by the sequencing machine), sites that have at least 5 individuals possesing a copy of the minor allele (to ignore singletons and rare variants), and with enough sequencing depth (e.g., multiple sequences that are identical) to ensure that we’ve got good genomic regions that are free from any sequencing errors, and have only 2 alleles at the locus.

The table below shows how these filtering options reduced the number of potential sites.

| Filter | Sites |

|---|---|

| Raw | 19,879 |

| QUAL > 20 | 15,930 |

| MAC > 5 | 10,627 |

| Depth 10X | 1,004 |

| 2 - alleles | 926 |

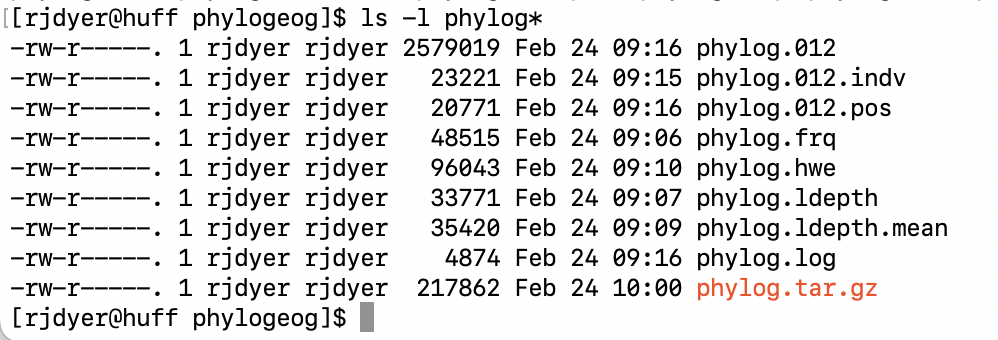

From these, I extracted the following derivative components. - site Allele frequencies - site Sequencing depth - site HWE estimates

Then I finished by exporting the filtered data in 012 allele format and imported it into R using gstudio.